Green light for killer robots: powers such as the US and Russia block a UN proposal to limit the use of weapons not controlled by humans

There was no agreement. There was not even agreement that there was no agreement. Last week, the latest meeting of the Convention on Certain Conventional Weapons (CCW) closed after eight years of heated debate, long discussions and painstaking studies commissioned by bodies of all kinds.

It is not just any commission. Behind the euphemistic name lies the effort of nearly a hundred countries to regulate what are known as Lethal Autonomous Weapons Systems (LAWS), itself another euphemism for autonomous weapons.

They can be drones, but also, for example, missiles guided by artificial intelligence. In time, they may even take on a humanoid appearance.

They share one trait: in a matter of seconds, guided by technologies such as artificial intelligence and with little or no human supervision, they can select a target and kill it.

In other words, they are machines that decide who lives and who dies.

This is not science fiction. After decades of development, and thanks to technologies such as AI and image recognition systems, there are already reports attesting to their use in armed conflicts.

For example, the New York Times recalls, UN investigators reported last March that government-backed forces in Libya had used a drone called the Kargu-2 against militia fighters.

Made by a Turkish defense contractor, the drone tracked and attacked fighters as they fled a rocket attack, according to the report, which did not make clear whether any human was controlling the drone.

In the 2020 war in Nagorno-Karabakh, the newspaper also recalls, Azerbaijan used missiles against the Armenian army that essentially loiter in the air until they detect the signal of an assigned target and then attack it.

And the list goes on and on. So much so that this year in the CCW, for the first time, there was a broad consensus to establish a protocol against these weapons.

But this is not enough. Given that any decision that comes out of these working groups must be the result of full consensus, that is, it must have the support of absolutely all its members, it is not enough simply for the majority to want a protocol for it to go ahead.

It's not even enough that the majority is overwhelming: as long as a single country disagrees, everything remains as before.

That is exactly what happened. The US, Russia, Israel, India and the UK in particular, each for its own reasons, refused even to start negotiating to stop this technology.

The conference concluded with only a vague statement about considering possible measures acceptable to all. A dead letter.

Unlike earlier CCW meetings, which for example prohibited the use of blinding lasers or antipersonnel mines, this sixth meeting will produce no firm decision.

This comes after 40 countries, according to Human Rights Watch, called more or less explicitly during the negotiations for new international law to prohibit and restrict autonomous weapons systems.

Among them are Algeria, Argentina, Austria, Bolivia, Brazil, Chile, Colombia, Costa Rica, Croatia, Cuba, Ecuador, Egypt, El Salvador, Spain, the Philippines, Ghana, Guatemala, Iraq, Jordan, Kazakhstan, Malta, Morocco, Mexico, Namibia, Nicaragua, Nigeria, New Zealand, Palestine, Pakistan, Panama, Peru, the Holy See, Sierra Leone, Sri Lanka, South Africa, Uganda, Venezuela, Djibouti and Zimbabwe.

To these should be added others whose position is more ambiguous, such as China, which opposed only the use of these weapons, or Portugal, which limited itself to asking that uses of these weapons contrary to human rights be covered, a demand most considered insufficient.

These petitions have run up against a good many vested interests. For starters, the New York Times singles out what it calls "war planners."

These are companies that are part of the arms industry, offering the promise of keeping soldiers out of harm's way with autonomous systems like drones: white-collar warfare.

That's why the top US delegate at the conference, Joshua Dorosin, went as far as proposing a non-binding code of conduct.

Behind this proposal, described at best as a strategy to delay the adoption of a firm protocol, are the interests of the US military.

The military works with companies such as Lockheed Martin, Boeing, Raytheon and Northrop Grumman on projects that include long-range missiles that detect moving targets, drone swarms that can identify and attack a target, and automated missile defense systems.

They are old acquaintances of the military. The first of these, Lockheed Martin, was already running the US Army's information technology departments in the 1990s, the journalist Naomi Klein reports in her book The Shock Doctrine, and Northrop is the manufacturer of the famous B-2 Spirit bomber, capable of penetrating anti-aircraft defenses.

Goal: stop killer robots

The issue has even reached Silicon Valley. In the summer of 2018, Google had to back out of what was then known as Project Maven, a deal between the company and the Pentagon under which the US military could use AI developed by the tech giant for military weapons.

The public scandal and discontent among employees then forced the company to draft an ethical code that would clarify the possible uses of its AI and remove the shadow of war from the company.

Among the public, however, the impression lingered that some countries are willing to partner with as many companies as necessary to develop technology for killing.

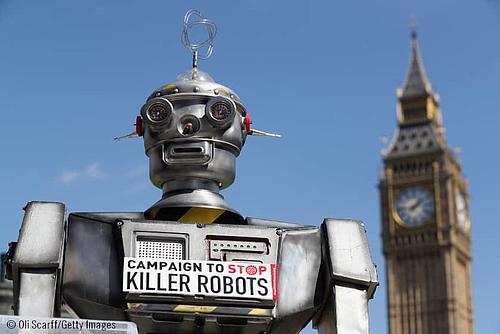

This is exactly the kind of fear that led to the birth in 2013 of Stop Killer Robots, an organization that delivered 17,000 signatures to the convention, via French ambassador Yann Hwang, calling for limits on autonomous weapons.

On the one hand, Stop Killer Robots sees it as morally reprehensible to assign lethal decision-making to machines, regardless of their technological sophistication.

On the other hand, it has doubts about their effectiveness. Can these machines really distinguish, in every case and with no margin of error, a combatant from a child, or someone carrying a weapon from someone who is not?

And further still: can machines always distinguish a hostile combatant from one who wants to surrender?

"Autonomous weapons systems raise ethical concerns for society about replacing human decisions about life and death with sensor processes, computer programs and machines," Peter Maurer, president of the International Committee of the Red Cross and an outspoken opponent of killer robots, told the conference.

Human Rights Watch and Harvard University had previously addressed the issue in a 23-page report whose conclusions were clear.

"Robots lack the compassion, empathy, mercy and judgment necessary to treat humans humanely, and cannot understand the inherent value of human life," the groups argued in a briefing paper supporting their recommendations.

“The absence of a substantive outcome at the UN review conference is a wholly inadequate response to concerns raised by killer robots,” said Steve Goose, arms director at Human Rights Watch.

Now the ball is back in the countries' court. Among disarmament advocates, the feeling is spreading that these commissions are not a valid instrument for legislating on something as serious as autonomous weapons.

Those who argue this include, for example, Daan Kayser, an expert on autonomous weapons at PAX, a peace advocacy group based in the Netherlands: "The fact that the conference could not agree even to negotiating on killer robots is a really clear sign that the CCW isn't up to it," he told the New York Times.

Noel Sharkey, an expert in artificial intelligence and chairman of the International Committee for Robot Arms Control, has been even more forceful. In his view, it is preferable to negotiate a new treaty directly rather than wait for further deliberations by the CCW.